A confidence interval provides an upper and lower bound around a point estimate by quantifying the variability present in the estimate. The general form of a confidence interval is:

Point estimate +/- confidence level * standard error

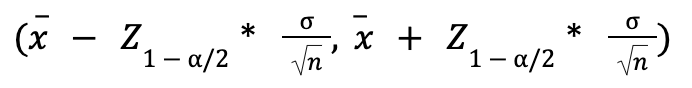

The confidence level is found from the quantile of the distribution the data is believed to be generated from, and the standard error is a measure of the spread of the sample data. As a common task in statistics is to estimate the mean of a population, a confidence interval for the mean parameter could be found by:

$$\bar{x} \pm z_{\alpha/2} \, \frac{\sigma}{\sqrt{n}}$$

where $\bar{x}$ is the sample mean, $z_{\alpha/2}$ refers to the quantile of the standard normal distribution based on the chosen significance level $\alpha$, $\sigma$ refers to the standard deviation of the population (assumed to be known), and $n$ is the sample size of the data collected.
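As a rough illustration, the interval for the mean with a known population standard deviation can be computed with Python's standard library alone. This is a minimal sketch: the function name `z_confidence_interval`, the sample values, and the value `sigma=0.2` are made up for the example and do not come from any real data set.

```python
import math
from statistics import NormalDist, fmean


def z_confidence_interval(sample, sigma, confidence=0.95):
    """Return (lower, upper) bounds for the population mean.

    Assumes the population standard deviation `sigma` is known and the
    sample mean is (approximately) normally distributed.
    """
    n = len(sample)
    alpha = 1.0 - confidence                      # significance level
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)   # two-sided critical value
    standard_error = sigma / math.sqrt(n)
    x_bar = fmean(sample)                         # point estimate
    margin = z * standard_error
    return x_bar - margin, x_bar + margin


# Hypothetical measurements, for illustration only.
sample = [4.9, 5.1, 5.3, 4.7, 5.0, 5.2, 4.8, 5.0]
lower, upper = z_confidence_interval(sample, sigma=0.2)
print(f"95% CI for the mean: ({lower:.3f}, {upper:.3f})")
```

When the population standard deviation is not known and has to be estimated from the sample, a quantile of the t distribution is normally used in place of the normal quantile; that case is not covered by this sketch.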

In the classical (frequentist) paradigm, the precise interpretation of a confidence interval (e.g. a 95% CI) is that if the data generation process were repeated many times, about 95% of the confidence intervals created from the resulting samples would capture the actual value of the population parameter. A wider confidence interval indicates less precision in the point estimate, whereas a narrower confidence interval implies greater precision. All else held equal, a larger sample size will reduce the width of the interval, as will a smaller standard deviation.
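The repeated-sampling interpretation and the effect of sample size on width can both be checked with a small simulation. The sketch below assumes normally distributed data with a known standard deviation; the helper names `interval_width` and `simulate_coverage`, and the parameter values (`mu=10.0`, `sigma=2.0`, `n=30`), are hypothetical choices made for illustration.

```python
import math
import random
from statistics import NormalDist, fmean


def interval_width(n, sigma, confidence=0.95):
    """Full width of the known-sigma interval for the mean."""
    z = NormalDist().inv_cdf(1.0 - (1.0 - confidence) / 2.0)
    return 2.0 * z * sigma / math.sqrt(n)


def simulate_coverage(mu, sigma, n, trials, confidence=0.95, seed=0):
    """Fraction of simulated intervals that contain the true mean `mu`."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    half_width = interval_width(n, sigma, confidence) / 2.0
    hits = 0
    for _ in range(trials):
        # Draw a fresh sample and build an interval around its mean.
        x_bar = fmean([rng.gauss(mu, sigma) for _ in range(n)])
        if x_bar - half_width <= mu <= x_bar + half_width:
            hits += 1
    return hits / trials


coverage = simulate_coverage(mu=10.0, sigma=2.0, n=30, trials=5000)
print(f"Share of intervals capturing the true mean: {coverage:.3f}")

# Width shrinks with the square root of the sample size.
for n in (25, 100, 400):
    print(n, round(interval_width(n, sigma=2.0), 3))
```

The simulated coverage should land close to the nominal 95%, though not exactly on it, since it is itself an estimate from a finite number of trials. Because the width scales with $1/\sqrt{n}$, quadrupling the sample size halves the interval.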