Activation functions transform the weighted sum of a node's inputs plus its bias into the node's output, which lets each node learn part of a complex function. The most basic activation function is the linear (identity) one, which simply passes that weighted sum through unchanged. No matter how many layers or units the network has, using a linear activation function at every node yields nothing more than a standard linear model, because a composition of linear maps is itself linear. Much of the power of Neural Networks therefore comes from using nonlinear activation functions at each node.

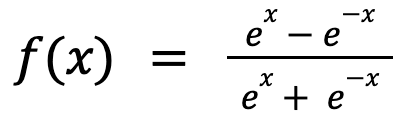
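The claim that stacked linear layers collapse into a single linear model can be checked numerically. The sketch below is only an illustration: the weights `W1`, `b1`, `W2`, `b2` and the helper names are made-up assumptions, not values from any particular network.

```python
# Hypothetical two-layer network in which every node uses a linear
# (identity) activation. All weights and biases are arbitrary examples.

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A by matrix B (both lists of rows)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]

W1 = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]  # hidden layer: 2 inputs -> 3 units
b1 = [0.5, -0.5, 1.0]
W2 = [[2.0, -1.0, 0.5]]                     # output layer: 3 units -> 1 output
b2 = [0.25]

def two_layer_linear(x):
    h = add(matvec(W1, x), b1)     # hidden layer, identity activation
    return add(matvec(W2, h), b2)  # output layer, identity activation

# The same mapping written as ONE linear model: W = W2 W1, b = W2 b1 + b2.
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

def single_linear(x):
    return add(matvec(W, x), b)

x = [1.0, -2.0]
print(two_layer_linear(x), single_linear(x))
```

Both functions return the same value for any input, so the extra layer adds no expressive power. Inserting a nonlinear function between the two layers is what breaks this equivalence.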

TanH is one such nonlinear activation function. The hyperbolic tangent works similarly to the sigmoid, but its outputs lie between -1 and 1 and are centered on zero, whereas the sigmoid's outputs lie between 0 and 1. For a long time, TanH was considered an acceptable default activation function for the hidden layers of a network, and it is still commonly used in Recurrent Neural Networks.
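To make the comparison with the sigmoid concrete, here is a minimal sketch using only Python's standard `math` module. The helper names `sigmoid` and `tanh_via_sigmoid` are illustrative assumptions. The sketch relies on the standard identity tanh(x) = 2·sigmoid(2x) − 1, which shows that TanH is a rescaled and shifted sigmoid.

```python
import math

def sigmoid(x):
    """Logistic sigmoid: outputs lie between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_via_sigmoid(x):
    """TanH expressed through the sigmoid: tanh(x) = 2 * sigmoid(2x) - 1."""
    return 2.0 * sigmoid(2.0 * x) - 1.0

# Compare the two activations on a few sample inputs.
for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  "
          f"tanh={math.tanh(x):+.4f}  via_sigmoid={tanh_via_sigmoid(x):+.4f}")
```

At `x = 0` the sigmoid returns 0.5 while TanH returns 0, which is the zero-centering that distinguishes the two.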