Simply put,

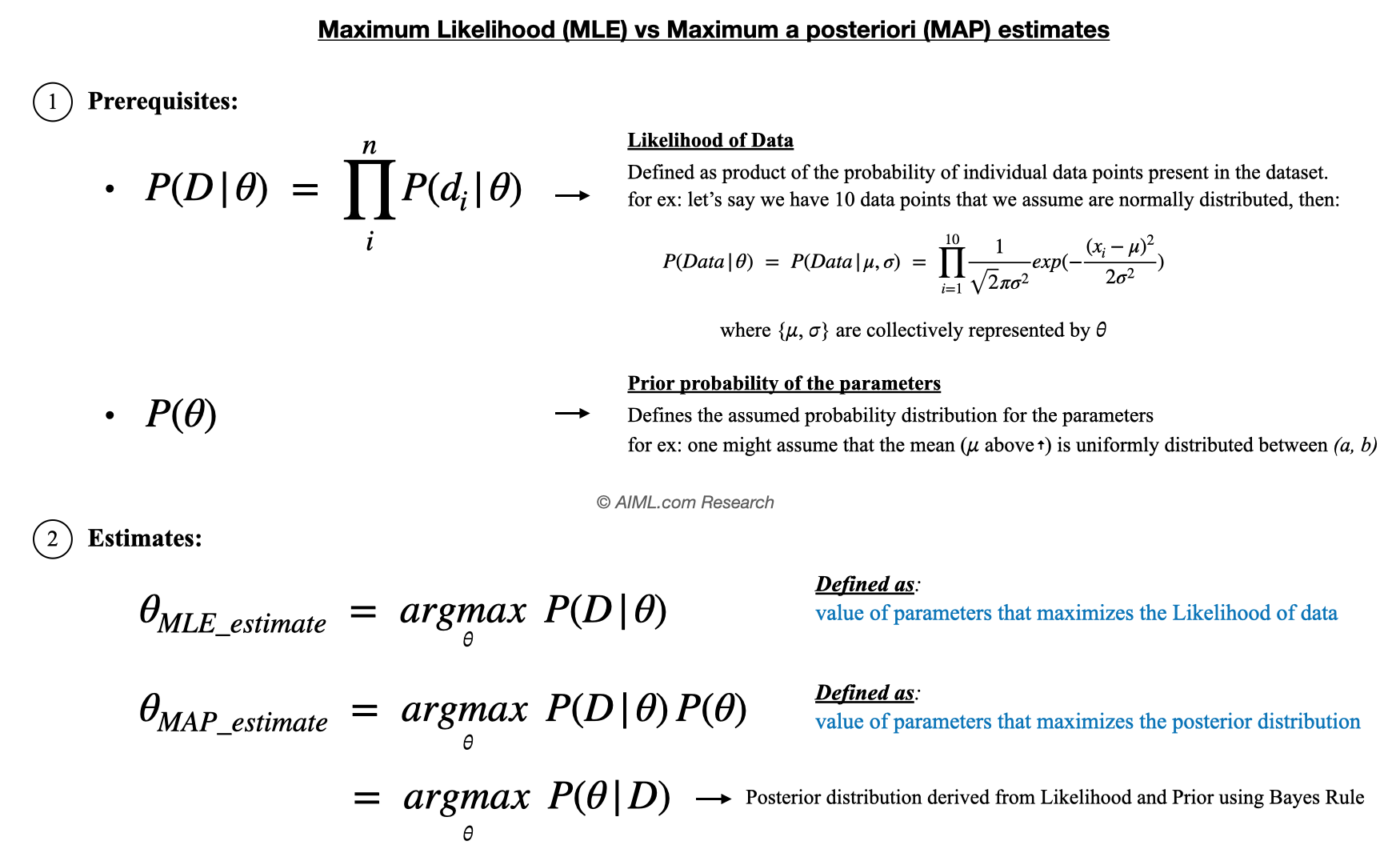

- MLE: estimates of parameters that maximizes the likelihood of data

- MAP: estimates of parameters that maximizes the posterior probability

The following figure explains this in more detail:

MLE vs MAP explained using an Example

Estimating the Bias of a Coin: Suppose we have a coin, and we want to estimate the probability (p) that it lands heads up. We don’t know if the coin is fair, so p could be any value between 0 (always tails) and 1 (always heads).

Let’s assume, we flip a coin 10 times and observe the following outcomes: 7 heads and 3 tails. Then the MLE and MAP estimates would be calculated as follows:

[table id=25 /]To conclude, MLE is a frequentist approach focusing solely on the observed data, while MAP is a Bayesian approach that combines data with prior beliefs. The choice between them depends on the specific context, the amount of data available, and whether incorporating prior knowledge is deemed important.

Video Explanation

- In the following video, Prof. Jeff Miller aka. MathematicalMonk explains the differences between MLE and MAP estimates. Even though the video is titled MAP estimates, the video also explains MLE estimates, and contrasts it with MAP estimates.