Backpropagation

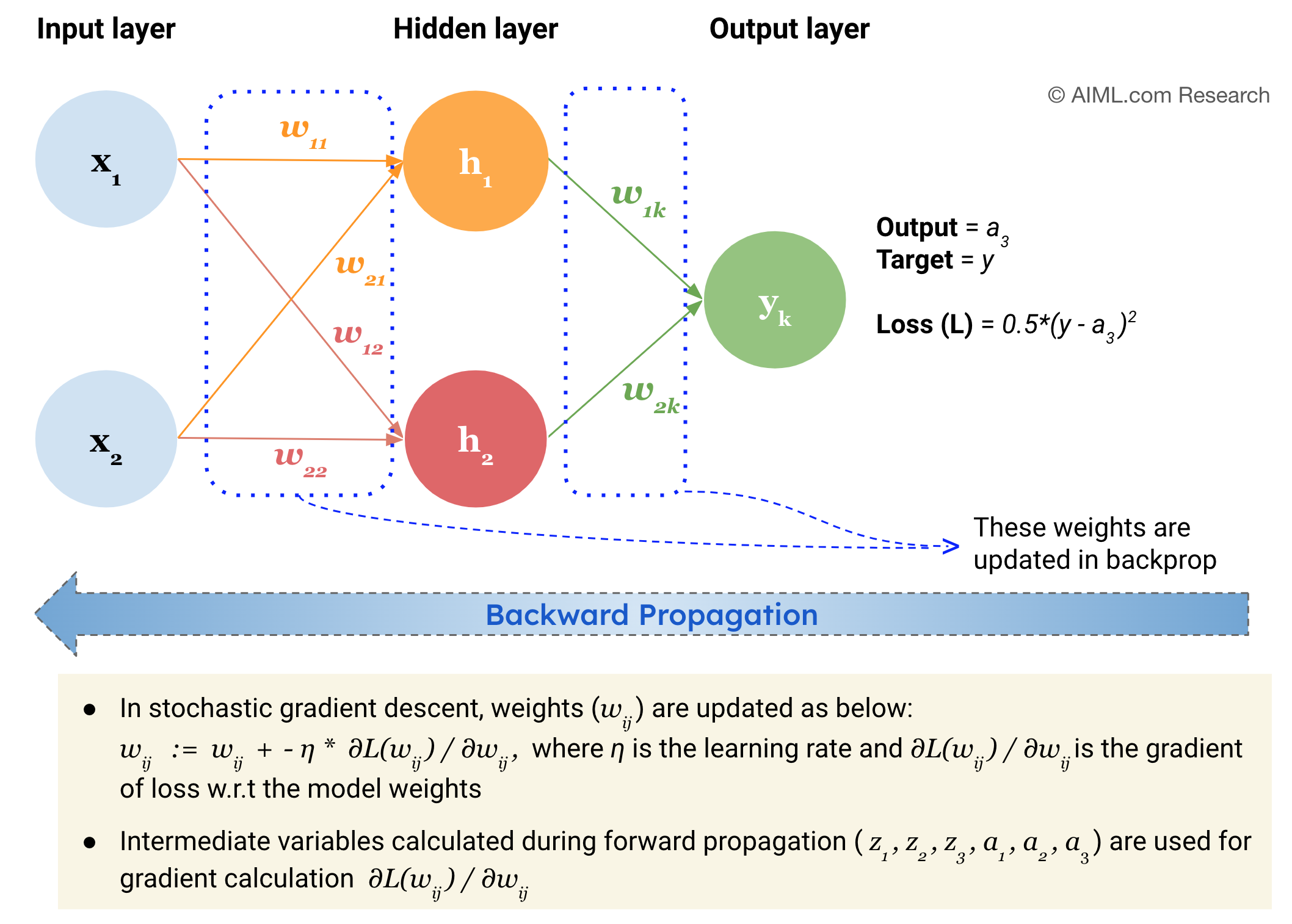

Backpropagation, short for “backward propagation of errors,” is the standard algorithm for training artificial neural networks, including deep learning models. It computes how the cost function changes with respect to each parameter of the network (i.e. weights and biases). A gradient descent optimization algorithm then uses those gradients to update the parameters so that, on each iteration, they move one step closer to a minimum of the cost function. This reduces the error between the predicted and the actual output, thereby improving the predictive accuracy of the network.
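As a rough sketch, the gradient descent update described above can be written as a single formula. The symbols are notation assumed here for illustration and do not come from the original article: $\theta$ stands for all weights and biases, $\eta$ for the learning rate, and $J$ for the cost function.

$$
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} J(\theta_t)
$$

Backpropagation supplies the gradient $\nabla_{\theta} J(\theta_t)$, and the optimizer decides how to use it. Plain gradient descent applies it directly as shown.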

Backpropagation consists of two main steps:

- Calculate gradients of the loss with respect to all model parameters

In the backward pass, the algorithm works backward through the network to compute the gradients of the error with respect to the weights and biases. This is done using the chain rule from calculus, which allows for the calculation of how changes in the network's parameters affect the error.

- Update the network weights using an optimization algorithm

The computed gradients are used to adjust the weights and biases in the network, aiming to reduce the error (or loss). This is typically done using optimization algorithms like stochastic gradient descent (SGD) or its variants, such as Adam or RMSprop.
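The two steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the 3 → 5 → 1 network, the tanh activation, the mean squared error loss, the random toy data, and the learning rate `lr` are all choices made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (made up for illustration): 4 samples, 3 features, 1 target each
X = rng.normal(size=(4, 3))
y = rng.normal(size=(4, 1))

# Parameters of an assumed 3 -> 5 -> 1 network
W1 = rng.normal(scale=0.5, size=(3, 5))
b1 = np.zeros(5)
W2 = rng.normal(scale=0.5, size=(5, 1))
b2 = np.zeros(1)


def forward(X, W1, b1, W2, b2):
    """Forward pass: returns the hidden activations and the prediction."""
    h = np.tanh(X @ W1 + b1)
    y_hat = h @ W2 + b2
    return h, y_hat


def mse(y_hat, y):
    """Mean squared error over all samples."""
    return float(np.mean((y_hat - y) ** 2))


h, y_hat = forward(X, W1, b1, W2, b2)
loss_before = mse(y_hat, y)

# Step 1: backward pass, applying the chain rule from the output to the input
n = X.shape[0]
d_yhat = 2.0 * (y_hat - y) / n   # dLoss/dy_hat
dW2 = h.T @ d_yhat               # dLoss/dW2
db2 = d_yhat.sum(axis=0)         # dLoss/db2
d_h = d_yhat @ W2.T              # dLoss/dh
d_z1 = d_h * (1.0 - h ** 2)      # tanh'(z) = 1 - tanh(z)^2
dW1 = X.T @ d_z1                 # dLoss/dW1
db1 = d_z1.sum(axis=0)           # dLoss/db1

# Step 2: plain gradient descent update with an assumed learning rate
lr = 0.01
W1_new, b1_new = W1 - lr * dW1, b1 - lr * db1
W2_new, b2_new = W2 - lr * dW2, b2 - lr * db2

loss_after = mse(forward(X, W1_new, b1_new, W2_new, b2_new)[1], y)
print(f"loss before update: {loss_before:.4f}, after update: {loss_after:.4f}")
```

The updated parameters are stored under new names so the original values remain available, for example to compare the analytic gradients against finite differences.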

Backpropagation in a Neural Network

Source: AIML.com Research

This process is referred to as backpropagation because it starts at the output layer and then uses the chain rule to calculate derivatives as it works its way backward toward the input layer.
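To make the backward direction concrete, consider a network with one hidden layer. The notation is assumed for this illustration only: $z_1 = W_1 x + b_1$, $h = \sigma(z_1)$, $\hat{y} = W_2 h + b_2$, and $L$ is the loss. Written schematically (ignoring matrix shapes and transposes), the chain rule gives:

$$
\frac{\partial L}{\partial W_2} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial W_2},
\qquad
\frac{\partial L}{\partial W_1} = \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial h} \cdot \frac{\partial h}{\partial z_1} \cdot \frac{\partial z_1}{\partial W_1}
$$

The factor $\partial L / \partial \hat{y}$ is computed once at the output layer and reused for the earlier layer, which is why the computation proceeds from the output backward.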

After each step of backpropagation, another step of forward propagation occurs, during which the input data is forwarded through the network using the updated weights and biases. If gradient descent is functioning correctly, the value of the cost function should generally decrease from one iteration to the next. With mini-batch methods such as SGD, the loss can fluctuate between individual iterations while still trending downward.
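The alternation of forward pass, backward pass, and update can be sketched as a short training loop. Everything specific here is an assumption made for illustration: the toy task (fitting `sin(x)`), the 1 → 8 → 1 tanh network, full-batch gradient descent, the learning rate, and the number of steps.

```python
import numpy as np

rng = np.random.default_rng(42)

# Toy regression task (made up for illustration): fit y = sin(x) on 64 points
X = np.linspace(-3.0, 3.0, 64).reshape(-1, 1)
y = np.sin(X)

# Assumed 1 -> 8 -> 1 network with tanh hidden units
W1 = rng.normal(scale=0.5, size=(1, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)

lr = 0.01      # assumed learning rate
losses = []    # loss recorded at every iteration

for step in range(1000):
    # Forward propagation with the current weights and biases
    h = np.tanh(X @ W1 + b1)
    y_hat = h @ W2 + b2
    losses.append(float(np.mean((y_hat - y) ** 2)))

    # Backpropagation: gradients of the loss w.r.t. every parameter
    d_yhat = 2.0 * (y_hat - y) / len(X)
    dW2 = h.T @ d_yhat
    db2 = d_yhat.sum(axis=0)
    d_z1 = (d_yhat @ W2.T) * (1.0 - h ** 2)
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0)

    # Gradient descent update
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
```

Plotting or printing `losses` is a simple way to check that training behaves as expected: the recorded loss should trend downward as the iterations proceed.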

For a complete picture of neural network training, please refer to this article: Describe briefly the training process of a Neural Network model

Video Explanation

- The 3-pack Backpropagation video series by Deep Lizard explains the intuition and mathematics behind backpropagation succinctly (Total Runtime: 33 mins)

- The 3-pack Backpropagation video series by 3Blue1Brown explains the concept of Backpropagation in more detail, covering gradient descent, the mechanics of Backprop, and the math behind it (Total Runtime: 43 mins)

- If you want a hands-on understanding of Backpropagation, check out the video by Andrej Karpathy, former AI Director of Tesla. In this video, Andrej explains the basics of Backpropagation by working through an example in a Jupyter notebook. You’ll never forget Backprop once you do this. (Total Runtime: 2.5 hrs)